Apache Kafka 🔥 Your Gateway to High-Demand Jobs - Sketech #22

A Hands-On Introduction to Event Streaming. Visual Guide for Beginners

Hey there! Nina here. Welcome to the free edition of the Sketech Newsletter!

Each week, you’ll get bold, engaging visuals and insights that make complex software engineering concepts click. This time, we’re exploring Apache Kafka and how it revolutionizes real-time data processing.

Apache Kafka is a powerful tool for real-time data streaming. If you’re new to Kafka, this guide will walk you through the essentials of how it works, why it’s useful, and how to set it up. Let’s break down Kafka in the simplest way possible.

1. What is Apache Kafka?

At its core, Kafka is a distributed event streaming platform. It allows you to publish and consume streams of records in real time. Kafka is designed to handle high volumes of data with minimal delay, making it a great fit for systems that need real-time data processing.

2. Kafka Key Concepts

Before diving into configuration, let’s cover the key concepts you need to understand:

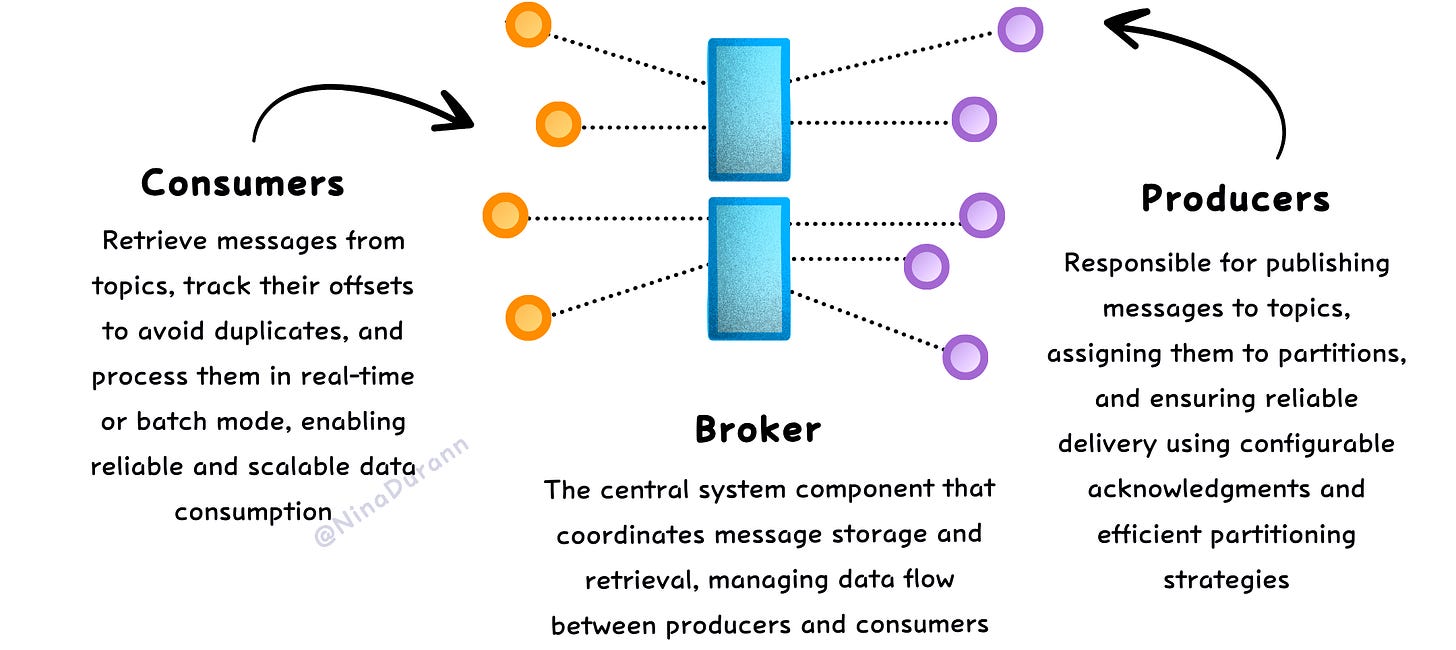

Producer: The component that writes data (messages) to Kafka.

Consumer: The component that reads data from Kafka.

Topic: A category or channel to which records are written. Producers send messages to topics, and consumers read from them.

Broker: Kafka runs as a cluster of brokers. A broker is a server that stores and serves data. Kafka can scale by adding more brokers to the cluster.

Partition: Each topic is split into partitions. Each partition is an ordered, append-only sequence that holds a subset of the topic’s records, and Kafka spreads partitions across brokers so the load is shared.
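To make the link between records, keys, and partitions concrete, here is a minimal Python sketch. It is a toy model, not Kafka client code: the topic is a list of lists, `choose_partition` is a hypothetical helper, and `zlib.crc32` is only a stand-in for the hash a real client uses (the Java client hashes keys with murmur2).

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a record key to a partition index with a stable hash.

    Assumption: crc32 is a stand-in; real Kafka clients use their own hash.
    """
    return zlib.crc32(key) % num_partitions


# Hypothetical topic with 3 partitions, modeled as plain lists.
partitions = [[] for _ in range(3)]

records = [(b"user-1", "login"), (b"user-2", "login"), (b"user-1", "logout")]
for key, value in records:
    # Records with the same key always land in the same partition,
    # so their relative order is preserved there.
    partitions[choose_partition(key, len(partitions))].append((key, value))

for index, partition in enumerate(partitions):
    print(index, partition)
```

The takeaway is that ordering is kept per key within one partition, not across the whole topic.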

3. Kafka vs. Traditional Messaging Systems

Kafka isn’t just a messaging system. It’s a distributed commit log. This means:

Kafka doesn’t just pass messages around. It stores them for a configurable retention period, so consumers can read, and re-read, them whenever they want.

It’s optimized for high throughput and fault tolerance. Kafka can handle massive amounts of data and keep it safe, even if some servers fail.
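The commit-log idea can be sketched in a few lines of Python. This is a simplified in-memory model under stated assumptions: `TopicLog` and the two consumer names are hypothetical, and real Kafka persists the log on disk across brokers.

```python
class TopicLog:
    """Toy append-only log: records stay in place after being read."""

    def __init__(self):
        self._records = []

    def append(self, value):
        """Append a record and return its offset (its position in the log)."""
        self._records.append(value)
        return len(self._records) - 1

    def read(self, offset, max_records=10):
        """Return up to max_records starting at offset; nothing is deleted."""
        return self._records[offset:offset + max_records]


log = TopicLog()
for event in ["order-created", "order-paid", "order-shipped"]:
    log.append(event)

# Two hypothetical consumers each track their own position.
billing_offset = 0
audit_offset = 0

billing_batch = log.read(billing_offset)
billing_offset += len(billing_batch)

# The audit consumer reads later and still sees every record,
# because reading does not remove anything from the log.
audit_batch = log.read(audit_offset)
audit_offset += len(audit_batch)
```

In a traditional queue, the first reader would typically have removed those messages. Here each consumer only moves its own offset.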

4. Do You Need ZooKeeper?

Traditionally, Kafka used ZooKeeper to manage its cluster. Kafka has since introduced KRaft (Kafka Raft), which has been production-ready since Kafka 3.3, and Kafka 4.0 removes ZooKeeper support entirely.

ZooKeeper is a separate coordination service for distributed systems. With KRaft, Kafka keeps its cluster metadata in its own Raft-based controller quorum, so there is no second system to install and operate.

If you are starting fresh with Kafka, KRaft is the way to go. It reduces complexity and allows Kafka to manage everything internally.
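Because KRaft controllers agree on metadata by majority vote, the number of controllers determines how many failures the cluster can survive. Here is a small sketch of that arithmetic; the function names are hypothetical helpers, not part of Kafka.

```python
def majority(voters: int) -> int:
    """Smallest number of voters that forms a majority."""
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    """How many voters can be down while a majority is still reachable."""
    return voters - majority(voters)


for voters in (1, 3, 5):
    print(voters, majority(voters), tolerated_failures(voters))
```

A single controller, as in the local setup in this guide, tolerates no failures. Three controllers tolerate one, which is why production quorums usually use an odd number of three or more.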

5. Setting Up Kafka (No ZooKeeper)

Download Kafka:

Go to the official Kafka download page and get the latest version.

Start Kafka in KRaft Mode:

Kafka comes with a simple sample configuration. Set up Kafka to work without ZooKeeper by editing the `server.properties` file (in Kafka 3.x releases, the KRaft sample lives under `config/kraft/`).

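```properties
# Run this node as both broker and controller (combined mode)
process.roles=broker,controller
node.id=1
controller.quorum.voters=1@localhost:9093
# The controller needs its own listener on the port named in the voters list
controller.listener.names=CONTROLLER
listeners=PLAINTEXT://localhost:9092,CONTROLLER://localhost:9093
advertised.listeners=PLAINTEXT://localhost:9092
listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT
inter.broker.listener.name=PLAINTEXT
log.dirs=/tmp/kafka-logs
```

Run Kafka: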

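On a fresh install, format the storage directory once before the first start: generate a cluster ID with `bin/kafka-storage.sh random-uuid`, then run `bin/kafka-storage.sh format -t <cluster-id> -c config/server.properties`. After that, start Kafka using the following command:

```bash
bin/kafka-server-start.sh config/server.properties
```

This will start your Kafka broker and controller without needing ZooKeeper.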
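If the broker refuses to start, a mismatch between `node.id` and `controller.quorum.voters` is a common culprit. The sketch below parses a properties snippet and runs two illustrative checks. Both `parse_properties` and `kraft_problems` are hypothetical helpers written for this guide; they are not Kafka's own validation and cover only a small part of it.

```python
def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def kraft_problems(props: dict) -> list:
    """Return human-readable problems found by two illustrative checks."""
    problems = []
    roles = [r.strip() for r in props.get("process.roles", "").split(",") if r.strip()]
    if not roles:
        problems.append("process.roles is not set")
    # Voters look like "1@localhost:9093,2@otherhost:9093".
    voters = props.get("controller.quorum.voters", "")
    voter_ids = {entry.strip().split("@", 1)[0] for entry in voters.split(",") if entry.strip()}
    if "controller" in roles and props.get("node.id") not in voter_ids:
        problems.append("node.id is not listed in controller.quorum.voters")
    return problems


sample = """
process.roles=broker,controller
node.id=1
controller.quorum.voters=1@localhost:9093
"""
print(kraft_problems(parse_properties(sample)))
```

An empty list means the two checks passed. It does not guarantee that the rest of the file is valid.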

6. Creating Topics and Sending Data

Now that Kafka is running, let’s create a topic and send some data.

Create a Topic:

Use Kafka's command line to create a new topic:

```bash
bin/kafka-topics.sh --create --topic my-topic --bootstrap-server localhost:9092 --partitions 1 --replication-factor 1
```

Produce Data:

Use the producer to send messages to your topic:

```bash
bin/kafka-console-producer.sh --topic my-topic --bootstrap-server localhost:9092
```

After running this, type messages directly into the terminal. Each line will be sent as a record to Kafka.

Consume Data:

Now, let’s read the data from Kafka:

```bash
bin/kafka-console-consumer.sh --topic my-topic --bootstrap-server localhost:9092 --from-beginning
```

You’ll see the messages you produced earlier appear in the terminal.
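Why does `--from-beginning` matter? A consumer with no saved position has to pick a starting offset: the start of the log or its current end. Here is a toy model of that choice. `starting_offset` is a hypothetical helper, and it assumes the earliest retained offset is 0, which stops being true once retention has deleted old records.

```python
def starting_offset(log_end_offset: int, from_beginning: bool) -> int:
    """Where a consumer with no saved position begins reading.

    Assumption: the earliest retained offset is 0.
    """
    return 0 if from_beginning else log_end_offset


records = ["hello", "world"]  # already produced to the topic

# With --from-beginning: replay everything already in the log.
replayed = records[starting_offset(len(records), from_beginning=True):]

# Without it: start at the end and wait for new records only.
only_new = records[starting_offset(len(records), from_beginning=False):]

print(replayed, only_new)
```

So if you drop the flag and see nothing, the consumer is most likely waiting for new messages rather than failing.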

7. Kafka Use Cases

Here are some examples of where Kafka shines:

Real-Time Analytics: Kafka is perfect for handling data streams from sources like IoT devices, logs, or web traffic and processing them in real time.

Event-Driven Architecture: You can build microservices that communicate via events. Kafka is used to decouple services, making systems more modular and scalable.

Data Pipelines: Kafka is a great backbone for moving data between systems in real time. It's commonly used to aggregate and process data from multiple sources.

We want to keep Sketech free for our subscribers. Drop a ❤️ to make it happen!

8. Best Practices for Beginners

Start Small:

Don’t overwhelm yourself. Start with simple producers and consumers. Focus on understanding how messages flow through Kafka.

Monitor Your Cluster:

Use tools like Kafka Manager or Prometheus to keep an eye on Kafka’s health. Monitoring is essential to prevent issues from snowballing.

Understand Partitioning:

Partitions are key to Kafka's scalability. Understand how they work and how to balance them for optimal performance.

Handle Failures Gracefully:

Kafka is designed to be fault-tolerant, but you still need to think about replication and ensuring your consumers can handle errors without crashing.
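One common way to keep a consumer from crashing on a bad record is to retry a few times and then set the record aside (often called a dead-letter pattern). The sketch below shows the idea in plain Python. `process_batch` and `parse_amount` are hypothetical names, and a real consumer would publish failed records to a separate topic instead of a list.

```python
def process_batch(records, handler, max_attempts=3):
    """Apply handler to each record; park records that keep failing."""
    processed, dead_letters = [], []
    for record in records:
        for attempt in range(1, max_attempts + 1):
            try:
                processed.append(handler(record))
                break  # success, move on to the next record
            except Exception as error:
                # After the final attempt, set the record aside
                # instead of letting the exception stop the loop.
                if attempt == max_attempts:
                    dead_letters.append((record, str(error)))
    return processed, dead_letters


def parse_amount(record):
    """Example handler: fails on anything that is not an integer."""
    return int(record)


processed, dead_letters = process_batch(["10", "oops", "25"], parse_amount)
print(processed)
print([record for record, _ in dead_letters])
```

The bad record is captured for later inspection while the rest of the batch is still processed.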

9. Boost Your Career with Kafka Skills

Learning Apache Kafka opens up many opportunities in the world of data engineering and real-time systems. By mastering Kafka, you'll be equipped to design scalable data pipelines, build event-driven architectures, and improve the performance of systems that rely on real-time data.

Career Opportunities: With Kafka skills, you can pursue roles such as Data Engineer, Stream Processing Engineer, Kafka Administrator, or Solutions Architect. Kafka is highly valued in industries like finance, e-commerce, healthcare, and tech, where large amounts of data need to be processed quickly and reliably.

Salary Expectations: Depending on your location and experience, salaries for Kafka-related roles range from $80,000 to $130,000 per year. Senior positions or roles involving advanced data architecture can push these numbers even higher.

Certifications: To validate your Kafka expertise, consider certifications like the Confluent Certified Developer for Apache Kafka or Confluent Certified Administrator. These certifications can help you stand out in a competitive job market and demonstrate your skills to potential employers.

And that’s a wrap for this edition of Sketech! It’s always inspiring to see how these ideas resonate with you and spark new perspectives. Your curiosity and engagement keep this journey going, and I’m truly grateful for it.

If you found value in this edition, drop a ❤️! Your feedback means the world to me.

Until next time,

Nina

Sketech Newsletter

Crafted to make concepts unforgettable

Excited to keep sharing valuable content with you! ⭐