Sketech #12 Last Week's Insights: SQL Tips, Deployment Strategies, API Limits & Microservices 𐓏 My Take on Optimizing Performance

Smart Moves for Smoother Systems

Hey! Nina here. Welcome to Sketech Free Edition, where you’ll find visuals and insights to make learning technical ideas simple and unforgettable.

Every week, I share concepts and visuals that simplify complex topics and make them easy to understand. This edition brings together some of the ideas I’ve explored recently—ideas that sparked interesting discussions and brought clarity to tricky concepts.

Here’s what’s inside:

SQL Query Execution Order – Understand the real sequence of query processing to write cleaner and faster SQL.

5 Deployment Strategies – Learn which strategy fits your project to ensure smooth and reliable releases.

API Rate Limiting – Keeping Systems Stable and Users Happy 😊 (Redesigned Visual)

Microservices Architecture – Explore how a well-structured microservices approach can enhance system performance and scalability (Redesigned Visual)

Hope you enjoy, and let me know which one resonates with you the most!

SQL Query Execution Order

(Understand the real sequence of query processing to write cleaner and faster SQL)

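SQL clauses are logically evaluated in a different order from the one in which you write them. Understanding this distinction is important because it decides which clauses can see which results (for example, why WHERE cannot filter on an aggregate but HAVING can), and it can have a direct impact on performance. The sequence below is the logical order; the query optimizer may pick a different physical plan as long as the results stay the same.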

Execution Flow

FROM: Selects tables and joins them.

WHERE: Filters rows based on conditions.

GROUP BY: Groups rows for aggregation.

HAVING: Filters groups after aggregation.

SELECT: Chooses the columns to return.

DISTINCT: Removes duplicates (if needed).

ORDER BY: Sorts the result set.

LIMIT/OFFSET: Limits the number of rows returned.
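To make the flow concrete, here is a small sketch using Python's built-in sqlite3 module. The orders table and its rows are made up for illustration. Each clause carries a comment describing its place in the logical order.

```python
import sqlite3

# In-memory database with a hypothetical orders table (illustration only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("ana", 5), ("ana", 40), ("ana", 30),
     ("ben", 60), ("ben", 8),
     ("cy", 20), ("cy", 25)],
)

query = """
SELECT customer, SUM(amount) AS total   -- evaluated after HAVING: pick output columns
FROM orders                             -- evaluated first: the source rows
WHERE amount >= 10                      -- row filter, before any grouping
GROUP BY customer                       -- one group per customer
HAVING SUM(amount) > 50                 -- group filter, after aggregation
ORDER BY total DESC                     -- sorting can use the alias from SELECT
LIMIT 2                                 -- evaluated last: cap the row count
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('ana', 70), ('ben', 60)]
```

The small orders (5 and 8) are gone before grouping, so they never count toward a total, and cy's group (45) is dropped only after its sum is known.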

Why It Matters

Write more efficient queries by filtering early and reducing unnecessary data processing.

Avoid performance issues by understanding when filtering, aggregation, and sorting occur.

Tips for Optimization

Avoid SELECT *: Specify only the columns you need.

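Use Indexes: Index the columns you filter, join, and sort on most often (WHERE, JOIN, and ORDER BY). Each index also slows down writes, so add them where the execution plan shows a benefit.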

Limit OFFSET: Use keyset pagination for better performance with large datasets.
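Here is a minimal sketch of the keyset idea next to plain OFFSET, again with Python's sqlite3. The events table, PAGE_SIZE, and both helper functions are hypothetical names chosen for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO events (id, name) VALUES (?, ?)",
    [(i, f"event-{i}") for i in range(1, 101)],
)

PAGE_SIZE = 10

def page_by_offset(page_number):
    """OFFSET pagination: the engine typically still reads and discards the skipped rows."""
    return conn.execute(
        "SELECT id, name FROM events ORDER BY id LIMIT ? OFFSET ?",
        (PAGE_SIZE, page_number * PAGE_SIZE),
    ).fetchall()

def page_after(last_seen_id):
    """Keyset pagination: seek directly to the rows after the last key the client saw."""
    return conn.execute(
        "SELECT id, name FROM events WHERE id > ? ORDER BY id LIMIT ?",
        (last_seen_id, PAGE_SIZE),
    ).fetchall()

third_page_offset = page_by_offset(2)   # ids 21..30
third_page_keyset = page_after(20)      # ids 21..30, found via the primary key
```

Both calls return the same rows. The difference shows up on deep pages, where the keyset version can jump to its starting point through the index instead of counting past everything before it. The trade-off is that keyset pagination needs a stable, unique sort key and does not support jumping to an arbitrary page number.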

Tools to Optimize Queries

Execution Plan: Check the execution plan in SSMS to find inefficiencies like missing indexes or bad joins.

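SQL Server Profiler: Trace query performance to find slow queries and bottlenecks. Microsoft has deprecated Profiler for trace capture in favor of Extended Events, so prefer those on recent versions.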

Indexes: SQL Server can suggest missing indexes to improve query speed.
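The tools above are specific to SQL Server. To show the same habit in a form you can run anywhere, this sketch swaps in SQLite's EXPLAIN QUERY PLAN through Python's sqlite3. It is an analogue, not the SSMS workflow, and the table, index name, and plan helper are assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount INTEGER)"
)
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [(f"c{i % 50}", i) for i in range(1000)],
)

def plan(sql):
    """Return the human-readable detail text of each step in SQLite's query plan."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

sql = "SELECT * FROM orders WHERE customer = 'c7'"

before = plan(sql)   # expect a full scan of the table
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer)")
after = plan(sql)    # expect a search that uses idx_orders_customer

print("before:", before)
print("after: ", after)
```

The exact wording of the plan text varies between SQLite versions, so treat it as something to read rather than parse. The point is the workflow: look at the plan, change one thing, look again.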

If you’re looking to improve the performance of your SQL queries, start by keeping the execution order in mind. Small adjustments can lead to big improvements in efficiency.

5 Deployment Strategies You Need to Know

When it comes to deployment, the strategy you choose can make a huge difference in how smoothly your updates are rolled out and how effectively you manage risk.

Here are a few deployment patterns I’ve found particularly useful:

1/ Canary: Gradually shifting traffic to a new version allows you to catch issues early without affecting all users. It’s a safe and steady approach.

↳ If you have 100 users, start by routing 10% to the new version, monitoring for any issues before gradually increasing to 50%, then 100%.

2/ Blue-Green: Having two identical environments gives you peace of mind. If something goes wrong, switching back is simple and quick.

↳ Maintain a "blue" production environment running the current version and a "green" environment with the new version. If the green environment passes tests, switch all traffic to it. If problems occur, instantly revert to blue.

3/ Rolling: I like this one for its practicality. It updates nodes incrementally, so there’s no downtime and minimal impact on users.

↳ In a cluster of 10 servers, update 2 servers at a time. As each pair is updated and verified, move to the next pair, ensuring continuous service availability.

4/ Feature Toggles: Perfect for testing features in production without full exposure. It adds flexibility and helps avoid big-bang rollouts.

↳ Implement a configuration flag that enables a new feature only for specific user segments or internal testers, allowing controlled exposure and easy disabling if needed.

5/ A/B Testing: This is invaluable when you want real-world data to validate changes. Comparing versions live with users is both insightful and efficient.

↳ Split your user base 50/50, with one group experiencing the current interface and another experiencing the new design, then collect metrics on user engagement, conversion rates, etc.
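Canary releases, feature toggles, and A/B tests all need a way to decide which users see what. One common approach, sketched here under my own assumptions, is to hash the user ID into a stable bucket. The function names, the salt "checkout-v2", and the flag layout are hypothetical.

```python
import hashlib

def bucket(user_id: str, salt: str) -> int:
    """Map a user to a stable bucket in 0..99 by hashing the salt and the id."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100

def in_rollout(user_id: str, percent: int, salt: str = "checkout-v2") -> bool:
    """Canary check: True for roughly `percent` percent of users."""
    return bucket(user_id, salt) < percent

def feature_enabled(user: dict, flag: dict) -> bool:
    """Feature toggle: on only if the flag is enabled and the user's segment is listed."""
    return flag["enabled"] and user["segment"] in flag["segments"]

def ab_variant(user_id: str, experiment: str) -> str:
    """A/B test: a 50/50 split that stays stable per user and per experiment."""
    return "A" if bucket(user_id, experiment) < 50 else "B"

users = [f"user-{i}" for i in range(100)]
for percent in (10, 50, 100):
    served = sum(in_rollout(u, percent) for u in users)
    print(f"{percent}% stage: {served} of {len(users)} users on the new version")
```

Because the bucket depends only on the user and the salt, a user who lands on the new version at the 10% stage stays on it at 50% and 100%. The counts are approximate for small user sets, since hashing spreads users evenly only on average.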

Each of these patterns has its place, and I think the key is understanding when to apply them based on your specific needs and infrastructure.
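The rolling and blue-green examples can also be sketched in a few lines. This is a toy model under stated assumptions: server names and versions are invented, and the health checks that a real rollout would run between batches are left out.

```python
from dataclasses import dataclass

def rolling_batches(servers, batch_size):
    """Yield consecutive groups of servers to update together."""
    for start in range(0, len(servers), batch_size):
        yield servers[start:start + batch_size]

def rolling_update(servers, batch_size, new_version, versions):
    """Update `versions` in place, batch by batch.

    Returns the lowest number of servers that stayed in service at any point.
    """
    min_in_service = len(servers)
    for batch in rolling_batches(servers, batch_size):
        # Servers in the current batch are out of rotation while they update.
        min_in_service = min(min_in_service, len(servers) - len(batch))
        for server in batch:
            versions[server] = new_version
    return min_in_service

@dataclass
class BlueGreenRouter:
    """Points all traffic at one of two identical environments."""
    live: str = "blue"

    def switch(self):
        self.live = "green" if self.live == "blue" else "blue"

servers = [f"srv-{i}" for i in range(10)]
versions = {s: "v1" for s in servers}
min_up = rolling_update(servers, 2, "v2", versions)
print(min_up)        # 8

router = BlueGreenRouter()
router.switch()      # cut over to green once it passes tests
router.switch()      # problems appear: revert to blue
print(router.live)   # blue
```

With 10 servers and batches of 2, at least 8 servers keep serving throughout, which is where the continuous availability comes from. The blue-green rollback is a single pointer flip, which is why it is so quick.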

Want to see the reactions? Head to the LinkedIn post

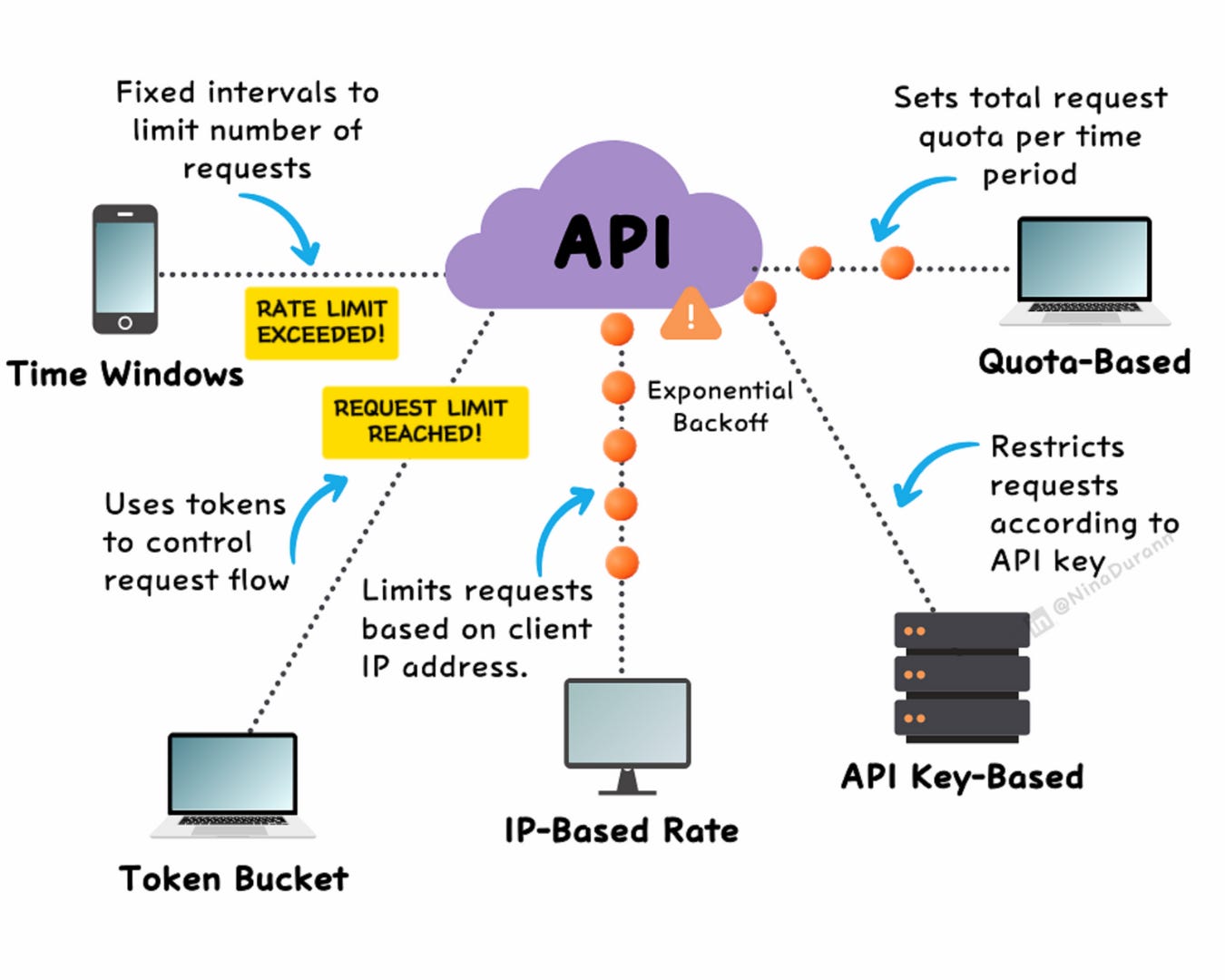

API Rate Limiting

(Keeping Systems Stable and Users Happy)

Rate limiting is like a traffic signal for your API. It ensures smooth traffic flow, protects against overload, and guarantees a consistent experience for users. When faced with traffic spikes or malicious attacks, rate limiting acts as a safeguard, keeping your API stable and fair for everyone.

Why Rate Limiting Matters

↳ Protects Your Infrastructure: By capping request rates, you prevent your servers from being overwhelmed during high demand.

↳ Shields Against Abuse: Rate limiting stops bad actors, like bots or attackers, from flooding your system with requests.

↳ Enhances User Trust: Even during peak times, users experience consistent performance, building reliability into your service.

Popular Rate Limiting Techniques

↳ Time Window: Limits requests within a fixed timeframe (e.g., 100 per minute).

↳ Token-Based: Users consume tokens for requests, replenished over time, allowing bursts but enforcing limits.

↳ IP-Based: Restricts requests from a single IP to prevent abuse.

↳ API Key-Based: Sets limits per API key, allowing tiered usage for different users or apps.

↳ Quota-Based: Allocates a total request allowance over a period (e.g., monthly limits).
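Here is a minimal, single-process sketch of the first two techniques. The class names and the injectable clock parameter are my own choices for illustration. A production limiter would usually keep its counters in shared storage so every server sees the same state.

```python
import time

class FixedWindowLimiter:
    """Time window: allow at most `limit` requests per `window` seconds."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit, self.window, self.clock = limit, window, clock
        self.window_start = clock()
        self.count = 0

    def allow(self):
        now = self.clock()
        if now - self.window_start >= self.window:
            self.window_start, self.count = now, 0   # start a fresh window
        if self.count < self.limit:
            self.count += 1
            return True
        return False

class TokenBucket:
    """Token-based: hold up to `capacity` tokens, refilled at `rate` tokens per second."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity, self.rate, self.clock = capacity, rate, clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Refill for the time that has passed, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

# A fake clock makes the behavior easy to follow without sleeping.
fake_now = [0.0]
tb = TokenBucket(capacity=3, rate=1, clock=lambda: fake_now[0])
burst = [tb.allow() for _ in range(4)]        # [True, True, True, False]
fake_now[0] += 2                              # two seconds later, two tokens are back
after_wait = [tb.allow() for _ in range(3)]   # [True, True, False]
```

IP-based and API key-based limiting reuse the same classes: keep a dictionary of limiters keyed by IP address or API key, and give each tier its own capacity and rate.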

Avoiding Mistakes in Rate Limiting

• Ignoring User Segmentation: Treating all users equally can frustrate paying customers. Tailor limits to tiers or plans.

• Unclear Error Feedback: Replace generic messages with actionable responses, e.g., an HTTP 429 (Too Many Requests) status with a Retry-After header and a message like "Rate limit exceeded. Try again in 10 minutes."

• Lack of Backoff Mechanisms: Slow clients down gradually under heavy load (throttling on the server, retries with growing delays on the client) instead of abruptly rejecting every request.
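The feedback and backoff points can be sketched together. The 429 status and the Retry-After header are standard HTTP. The function names, the response body layout, and the default delays are assumptions for this example.

```python
import math
import random

def rate_limited_response(seconds_until_reset: float) -> tuple:
    """Build a (status, headers, body) triple for a rejected request."""
    wait = max(1, math.ceil(seconds_until_reset))
    headers = {"Retry-After": str(wait)}   # standard HTTP header, here in seconds
    body = {
        "error": "rate_limit_exceeded",    # hypothetical error code
        "message": f"Rate limit exceeded. Try again in {wait} seconds.",
    }
    return 429, headers, body              # 429 Too Many Requests

def backoff_delays(retries: int, base: float = 1.0, cap: float = 60.0, rng=random.random):
    """Client side: exponential backoff with full jitter, capped at `cap` seconds."""
    return [rng() * min(cap, base * 2 ** attempt) for attempt in range(retries)]

def next_wait(headers: dict, fallback: float) -> float:
    """Prefer the server's Retry-After hint over the client's own guess.

    Assumes the header carries seconds; it can also carry an HTTP date.
    """
    value = headers.get("Retry-After")
    return float(value) if value is not None else fallback

status, headers, body = rate_limited_response(600)
print(status, headers, body["message"])
print(backoff_delays(4, rng=lambda: 1.0))  # [1.0, 2.0, 4.0, 8.0]
```

The jitter matters in practice: if every client waits exactly the same time, they all come back at once. With the default random source, the delays spread out between zero and the growing upper bound.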

Implementing Rate Limiting

• Nginx or Apache: Apply server-side limits with configurable rules.

• Redis: Leverage it for tracking usage and enforcing limits in real time.

• Cloud Services: Tools like AWS API Gateway simplify implementation for cloud-hosted APIs.

When done right, rate limiting is more than a safeguard: it’s an enabler for scalability and trust. It ensures your service is ready to handle growth while protecting the experience for all users.

Check out the LinkedIn post to see the full discussion

Microservices Architecture

(The Big Picture ⭐)

Here’s a quick overview of the basic architecture and the key principles behind it:

Microservices architecture structures applications as small, independent services. Each service operates autonomously and is designed to:

→ Run in its own process, isolated from the other services.

→ Communicate via protocols such as HTTP/REST or gRPC, or asynchronously through message brokers using protocols like AMQP.

→ Be deployed, scaled, and updated independently.

Advantages of Microservices

• Scalability: Individual services can scale based on their specific demands.

• Flexibility: Each service can use the most suitable technology stack for its needs.

• Resilience: A failure in one service can be contained so it does not take down the entire system.
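Resilience does not come for free; callers have to be written so that a failing dependency cannot drag them down. One widely used pattern for that is a circuit breaker, sketched below. It is my illustration rather than something from the visual, and the class, its thresholds, and the inventory example are hypothetical.

```python
import time

class CircuitBreaker:
    """Stop calling a failing dependency for a while and use a fallback instead."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None   # None means the circuit is closed (calls allowed)

    def call(self, func, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()        # open: skip the remote call entirely
            self.opened_at = None        # cool-down over: allow one trial call
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0                # a success resets the failure count
        return result

def fetch_inventory():
    """Stand-in for a call to another service that is currently down."""
    raise RuntimeError("inventory service down")

breaker = CircuitBreaker(max_failures=3, reset_after=30.0)
answers = [breaker.call(fetch_inventory, lambda: "cached inventory") for _ in range(5)]
print(answers)  # five fallback answers; only the first three attempts reach the service
```

After three failures the breaker stops calling the broken service and answers from the fallback, so the caller stays responsive while the dependency recovers.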

Challenges to Consider

• Increased Complexity: Managing numerous services adds operational overhead.

• Data Consistency Issues: Keeping data synchronized across distributed services can be difficult.

• Monitoring Requirements: With many services running at once, you need effective real-time monitoring to track service health and catch potential issues early.

Best Practices for Adopting Microservices

1/ Define Services Clearly: Break down your application’s functions into distinct, manageable services.

2/ Design Communication Wisely: Choose efficient inter-service communication protocols, such as REST or gRPC.

3/ Decouple Databases: Use independent databases per service to avoid tight coupling.

4/ Automate Deployments: Set up CI/CD pipelines to streamline integration and deployment processes.

Switching to microservices didn’t solve everything overnight, but it made deployments manageable and gave us the confidence to scale with greater ease and stability.

And with that, we’ve reached the end of Sketech Edition #12! This week has been amazing on social media, and the way you’ve responded to the visuals has been incredibly motivating. Thanks for joining me. See you in the next edition!

Nina

Sketech Newsletter Crafted to make concepts unforgettable 🩵